How The Neural Network Learned To Understand Emotions On The TV Series “Friends”

You probably know that in a conversation we get most of the information not only from the speaker’s words, but also from intonation, gestures and facial expressions. Written speech is much poorer than spoken speech because it leaves out all of these cues. That is why one of the hardest problems facing the creators of virtual assistants is teaching a computer to sense the tone of human speech. Aduk GmbH is a German hardware company that provides assistance with firmware programming, hardware engineering services and hardware development.

Recently, neural network researchers proposed an effective model that identifies emotions in written conversations using graphs. They called their solution DialogueGCN, named after the graph convolutional network (GCN) at its core. Let’s look at how it works and how applied Machine Learning and Deep Learning technologies may help robots understand our sarcastic responses in the future.

Researchers assume that the meaning of a phrase depends on two levels of context:

- The Sequential Context relates to the position of each word in the sentence and of each utterance in the dialogue. Here we study the relationships between words and the semantic and syntactic characteristics of sentences. Overall, this is a fairly detached approach that produces a structural picture of the phrase.

- The Speaker Level Context takes into account the relationships between the participants in the conversation. Here the analysis covers not only words and sentences, but also their impact on both the listener and the speaker, since a person’s state and context may change after uttering a phrase. These matters are, first, psychological in nature and, second, much harder to analyze mechanically.

The creators of DialogueGCN focused on the second, conversational (speaker-level) context. They found that graphs can model such relationships and yield features for further machine learning.

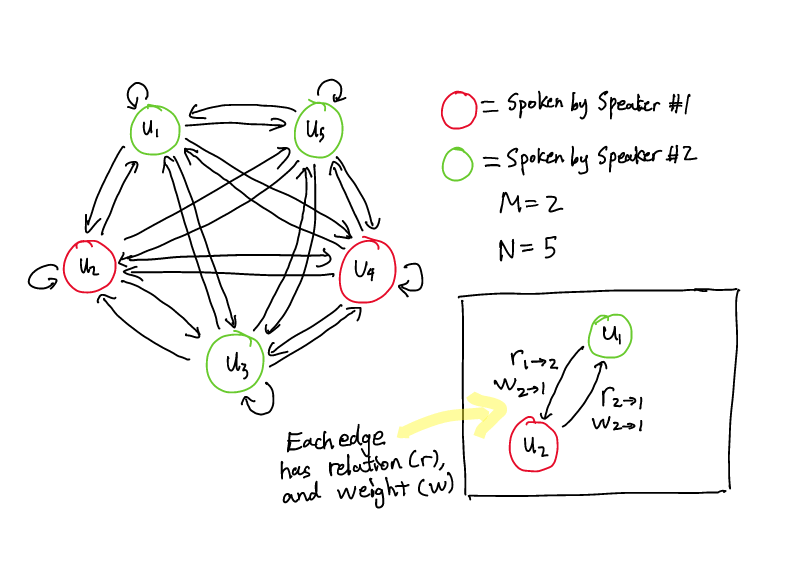

The researchers represented each utterance as a vertex of the graph: u[1], u[2], …, u[N]. The graph is directed, i.e. the edge from u[1] to u[2] is distinct from the edge from u[2] to u[1]. Each edge has two characteristics: a weight W and a relation R. The ultimate goal of the work is to train the neural network to recognize the emotional tone of each utterance.

As you can see in the illustration, each vertex also has an edge that points back to itself. This reflects the influence of the utterance on its own speaker, as described above.

This technique has a weak point: the graph can grow to a huge size as the conversation gets longer and the number of participants increases, requiring more and more computing resources. The researchers solved this problem by introducing an additional parameter i, which limits the analysis window. When processing each phrase, the neural network takes into account only those utterances that lie within i steps of the node in either direction. In this way, it processes both the past utterances that led to the remark and the future ones that followed it.
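To make the windowing idea concrete, here is a minimal sketch in Python. The function name `build_edges` and the choice to store each connection as an `(i, j)` pair are our own assumptions for illustration, not the authors' code. An entry `(i, j)` means that utterance j lies inside the context window of utterance i.

```python
def build_edges(num_utterances, window):
    """Return directed connections (i, j) between utterances.

    Each utterance is linked to every utterance at most `window` steps
    before or after it, and to itself (the self-loop).
    """
    edges = []
    for i in range(num_utterances):
        first = max(0, i - window)
        last = min(num_utterances - 1, i + window)
        for j in range(first, last + 1):
            edges.append((i, j))  # j == i gives the self-loop
    return edges


edges = build_edges(num_utterances=5, window=1)
print(len(edges))                        # 13
print((0, 1) in edges, (1, 0) in edges)  # True True: two distinct directed edges
print((0, 2) in edges)                   # False: outside the window
```

With a fixed window, the number of connections grows linearly with the length of the conversation instead of quadratically, which is the saving the parameter is meant to provide.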

Now let’s look more closely at the edge characteristics that play a key role in learning emotions. As mentioned earlier, each edge has a weight W and a relation R. The first parameter determines the importance of each utterance in the conversation; the second describes the edge’s type. The weight is calculated using a special attention function; we will not go into it in this article, and interested readers can consult the original paper. The relation takes into account who uttered a particular remark and when. Thus, the graph reflects both the order and the authorship of phrases in the conversation.
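The two characteristics can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the relation is encoded as a tuple of (speaker of i, speaker of j, temporal direction), and the weight is a plain softmax over dot products, which stands in for the attention function of the original work rather than reproducing it. The speakers and feature values are made up.

```python
import math


def edge_relation(speakers, i, j):
    """Label the connection from utterance i to utterance j.

    Assumed encoding: (speaker of i, speaker of j, temporal direction).
    """
    if j < i:
        direction = "past"
    elif j > i:
        direction = "future"
    else:
        direction = "self"
    return (speakers[i], speakers[j], direction)


def attention_weights(features, i, neighbours):
    """Softmax over dot products between utterance i and its neighbours.

    A stand-in for the real attention function, not a copy of it.
    """
    scores = [sum(a * b for a, b in zip(features[i], features[j]))
              for j in neighbours]
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return {j: e / total for j, e in zip(neighbours, exps)}


speakers = ["Ross", "Rachel", "Ross"]            # hypothetical dialogue
features = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]  # made-up utterance vectors

print(edge_relation(speakers, 1, 0))    # ('Rachel', 'Ross', 'past')
weights = attention_weights(features, 1, [0, 1, 2])
print(round(sum(weights.values()), 6))  # 1.0
```

The softmax guarantees that the weights of all connections leaving one utterance add up to one, so they can be read as shares of attention.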

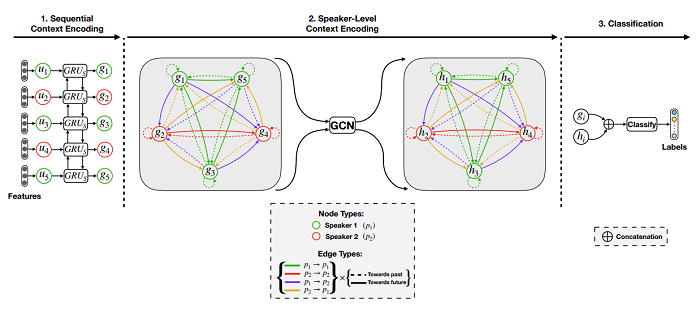

To prepare data for the GCN, each utterance is represented as a feature vector that encodes its sequential context. To do this, the phrases are run through a series of gated recurrent units (GRUs). The resulting vectors are passed to the network as a graph for feature extraction. As a result, the GCN assigns conversational context to each utterance based on the normalized sum of the vertices connected to it.
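The "normalized sum" can be illustrated with a deliberately simplified aggregation step. This sketch assumes the feature vectors have already been produced by the recurrent encoder (the values below are made up), and it leaves out the learned weight matrices and relation-specific transforms that a real graph convolution layer would have. The function name `aggregate` is hypothetical.

```python
def aggregate(features, edges, weights=None):
    """One simplified graph-convolution step.

    For each vertex i, sum the feature vectors of its neighbours j
    (each scaled by the edge weight) and divide by the neighbour count.
    """
    dim = len(features[0])
    sums = [[0.0] * dim for _ in features]
    counts = [0] * len(features)
    for i, j in edges:
        w = 1.0 if weights is None else weights[(i, j)]
        for k in range(dim):
            sums[i][k] += w * features[j][k]
        counts[i] += 1
    return [[value / count for value in row] if count else row
            for row, count in zip(sums, counts)]


features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # made-up encoder outputs
edges = [(0, 0), (0, 1),
         (1, 0), (1, 1), (1, 2),
         (2, 1), (2, 2)]                         # window of 1 plus self-loops

smoothed = aggregate(features, edges)
print(smoothed[0])  # [0.5, 0.5]
print(smoothed[2])  # [0.5, 1.0]
```

After this step each utterance vector carries information from its neighbours in the dialogue, which is what lets the model take the surrounding conversation into account.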

This new layer of features is combined with the sequential-context values. The combined representation is then passed to a classifier. As a result, the researchers obtain the predicted emotional tone of each remark in the conversation.
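The final step can be sketched as follows, assuming the two sets of features are joined by plain concatenation and scored by a single linear layer followed by a softmax. The weights, labels and feature values are made up for the example; a trained model would learn its weights from data.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to one."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(sequential, speaker_level, weight_rows, labels):
    """Concatenate both context vectors and pick the most probable label."""
    combined = sequential + speaker_level  # list concatenation
    scores = [sum(w * x for w, x in zip(row, combined)) for row in weight_rows]
    probabilities = softmax(scores)
    best = max(range(len(labels)), key=probabilities.__getitem__)
    return labels[best], probabilities


labels = ["neutral", "joy", "anger"]  # hypothetical label set
weight_rows = [
    [1.0, 0.0, 0.0, 0.0],             # made-up weights, one row per label
    [0.0, 1.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
]

label, probabilities = classify([0.2, 0.9], [0.1, 0.7], weight_rows, labels)
print(label)  # joy
```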

An important problem when building such Machine Learning systems is the lack of test data sets (benchmarks). The researchers used labeled multimodal collections that combine text with video or audio content. Because the authors focused on text, they ignored the additional data.

As a result, DialogueGCN was tested on the following data sets:

- IEMOCAP: dialogues between ten different people, with an emotion label for each phrase.

- AVEC: conversations between people and computer assistants, with utterances annotated for several characteristics.

- MELD: 1,400 dialogues and 13 thousand utterances from the series “Friends” with emotion labels.

The test results demonstrated the effectiveness of DialogueGCN, which in most cases identified the emotional tone of utterances better than the other comparable neural networks. According to the researchers, this shows the importance of the conversational context, since it is the main difference between the new neural network and earlier ones. They reached the same conclusion when, as an experiment, they excluded the conversational context from the processing pipeline.

Most DialogueGCN errors fell into two scenarios. First, the neural network confuses closely related emotions, such as anger and frustration or happiness and excitement. Second, the computer struggles with the tone of short utterances (“OK”, “Aha”). The researchers suggested that the error rate could be reduced by adding the video and audio streams that they had deliberately left out.
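Errors of the first kind are easy to spot by tallying which label pairs get mixed up. The sketch below shows one simple way to do that; the function name `confusion_pairs` and the labels are made up for the example and are not results from the study.

```python
from collections import Counter


def confusion_pairs(true_labels, predicted_labels):
    """Count (true, predicted) pairs where the prediction was wrong."""
    return Counter(
        (t, p) for t, p in zip(true_labels, predicted_labels) if t != p
    )


# Made-up labels, used only to show the mechanics.
true_labels = ["anger", "anger", "happiness", "excitement", "anger"]
predicted = ["frustration", "frustration", "happiness", "happiness", "anger"]

pairs = confusion_pairs(true_labels, predicted)
print(pairs.most_common(1))  # [(('anger', 'frustration'), 2)]
```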

The value of this work is not limited to interesting prospects for Natural Language Processing (NLP) systems. It is also a great example of using graphs in Data Science and applied machine learning. The ability to analyze connections between objects reveals the nature of their relationships and makes it possible to bring even such elusive categories as human mood into a mathematical equation.
